Imagine a world where machines can match specialists at diagnosing certain diseases, create art that moves us to tears, and sift market data for patterns no human analyst could spot. This isn’t science fiction. It’s the reality neural networks are building today. And if you’re not paying attention, you’re about to be left behind in the technological revolution.

Introduction: Why Your Future Depends on Understanding Neural Networks

In 2024, neural networks have moved beyond academic curiosity to become the engine room of modern civilization. They’re the secret sauce behind everything from ChatGPT’s conversational brilliance to Tesla’s Autopilot driver-assistance system. But here’s the uncomfortable truth: understanding neural networks is no longer optional for anyone in tech, business, or, frankly, modern society.

By the end of this deep dive, you’ll not only grasp how these digital brains work but also see why they’re fundamentally changing what’s possible in our world. More importantly, you’ll understand the risks of ignoring this technology—because while you’re reading this, someone else is using neural networks to automate your job, outcompete your business, or reshape your industry.

Background: From Biological Inspiration to Digital Revolution

The Humble Beginnings

The story starts in 1943, when neurophysiologist Warren McCulloch and mathematician Walter Pitts created the first mathematical model of a neuron. Their insight was profound: brain cells could be modeled as simple computational units. This was the intellectual equivalent of discovering fire—simple in concept, but revolutionary in implication.

Key Historical Milestones:

- 1943: McCulloch-Pitts neuron (binary logic gates)

- 1958: Frank Rosenblatt’s Perceptron (first learning algorithm)

- 1986: Backpropagation revolution (enabling multi-layer networks)

- 2012: AlexNet breakthrough (deep learning goes mainstream)
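The 1958 perceptron milestone is easiest to appreciate in code. Below is a minimal plain-Python sketch of the classic perceptron learning rule: a step activation, plus an update that shifts the weights only when a prediction is wrong. The function names, the learning rate of 1, and the epoch count are illustrative assumptions, not details taken from Rosenblatt’s original work; the toy task is learning the logical AND function.

```python
# Perceptron sketch: step activation plus the classic error-driven update rule.
# The learning rate and epoch count are illustrative assumptions.

def step(z):
    # Fires (outputs 1) only when the weighted sum exceeds the threshold of 0
    return 1 if z > 0 else 0

def predict(weights, bias, x):
    return step(sum(w * xi for w, xi in zip(weights, x)) + bias)

def train_perceptron(samples, labels, lr=1.0, epochs=20):
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(epochs):
        for x, y in zip(samples, labels):
            error = y - predict(weights, bias, x)  # -1, 0, or +1
            # Weights change only when the prediction is wrong
            weights = [w + lr * error * xi for w, xi in zip(weights, x)]
            bias += lr * error
    return weights, bias

# Truth table for logical AND
X = [(0, 0), (0, 1), (1, 0), (1, 1)]
y_and = [0, 0, 0, 1]

w, b = train_perceptron(X, y_and)
print([predict(w, b, x) for x in X])  # → [0, 0, 0, 1]
```

Because AND is linearly separable, this rule is guaranteed to find a separating line. It can never learn XOR, which is exactly the limitation that multi-layer networks trained with backpropagation later overcame.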

The Biological Blueprint

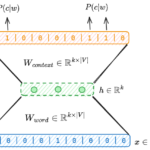

Think of a neural network as a digital approximation of your brain’s neural circuitry. Just as your brain learns from experience, neural networks learn from data. The fundamental building block—the artificial neuron—mirrors its biological counterpart:

Biological Neuron → Artificial Neuron

- Dendrites → Input connections

- Cell body → Summation function

- Axon → Output connection

- Synapses → Weights (learnable parameters)

The analogy is loose: artificial neurons are drastically simplified abstractions of biological ones, not faithful copies. But that simple abstraction has proven astonishingly effective.

Core Concepts: How Neural Networks Actually Work

The Basic Architecture: Layers Upon Layers

A neural network consists of three fundamental components:

- Input Layer: Where data enters (like your senses)

- Hidden Layers: Where computation happens (your brain’s processing)

- Output Layer: Where results emerge (your actions/decisions)
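To make the three layers concrete, here is a minimal plain-Python sketch of a forward pass through a tiny fully connected network. The layer sizes (3 inputs, 4 hidden neurons, 2 outputs), the weights, and the helper names `dense_layer` and `forward` are all hypothetical; a real network would learn its weights during training.

```python
import math

# Hypothetical sizes for illustration: 3 inputs -> 4 hidden neurons -> 2 outputs.

def relu(z):
    return max(0.0, z)

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def dense_layer(inputs, weights, biases, activation):
    # weights[j] holds the incoming weights of neuron j; every neuron sees every input
    return [
        activation(sum(w * x for w, x in zip(neuron_weights, inputs)) + b)
        for neuron_weights, b in zip(weights, biases)
    ]

def forward(inputs, params):
    # Data flows input layer -> hidden layer(s) -> output layer
    activations = inputs
    for weights, biases, activation in params:
        activations = dense_layer(activations, weights, biases, activation)
    return activations

# Made-up weights; a trained network would have learned these from data
params = [
    ([[0.2, -0.5, 0.1], [0.7, 0.3, -0.2], [-0.4, 0.6, 0.9], [0.05, 0.05, 0.05]],
     [0.0, 0.1, -0.1, 0.0], relu),     # hidden layer: 4 neurons
    ([[0.3, -0.8, 0.5, 0.2], [-0.6, 0.4, 0.1, 0.9]],
     [0.05, -0.05], sigmoid),          # output layer: 2 neurons
]

print(forward([1.0, 0.5, -1.5], params))
```

Each layer is just the single-neuron computation repeated for every neuron, and the output of one layer becomes the input of the next.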

The Mathematics of Learning

At its core, training a neural network comes down to two fundamental operations:

Forward Propagation:

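import math

# Simplified neuron computation (sigmoid used here as the activation function)
def activation_function(z):
    return 1.0 / (1.0 + math.exp(-z))

def neuron_output(inputs, weights, bias):
    # Weighted sum of inputs plus bias, then squashed by the activation
    weighted_sum = sum(x * w for x, w in zip(inputs, weights))
    return activation_function(weighted_sum + bias)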

Backward Propagation (The Learning Magic):

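This is where neural networks earn their keep. Backpropagation uses the chain rule to work out how much each weight contributed to the prediction error (the gradient of the loss with respect to that weight), and an optimizer such as gradient descent then nudges every weight in the direction that reduces the error. Repeated over many examples, this gradually improves performance, much like how you learn from mistakes.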
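Here is a minimal sketch of backpropagation and gradient descent for the simplest possible case: a single sigmoid neuron trained with binary cross-entropy loss to learn the logical OR function. The loss choice, learning rate, epoch count, and function names are illustrative assumptions. With this particular loss, the chain rule collapses neatly: the gradient of the loss with respect to the neuron’s pre-activation is simply prediction minus target.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train_neuron(samples, targets, lr=1.0, epochs=1000):
    weights = [0.0] * len(samples[0])
    bias = 0.0
    n = len(samples)
    for _ in range(epochs):
        grad_w = [0.0] * len(weights)
        grad_b = 0.0
        for x, y in zip(samples, targets):
            # Forward pass
            z = sum(w * xi for w, xi in zip(weights, x)) + bias
            y_hat = sigmoid(z)
            # Backward pass (chain rule): for sigmoid + binary cross-entropy,
            # dLoss/dz simplifies to (y_hat - y); then dz/dw_i = x_i and dz/db = 1
            dz = y_hat - y
            grad_w = [g + dz * xi for g, xi in zip(grad_w, x)]
            grad_b += dz
        # Gradient descent: step each parameter against its average gradient
        weights = [w - lr * g / n for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / n
    return weights, bias

X = [(0, 0), (0, 1), (1, 0), (1, 1)]
y_or = [0, 1, 1, 1]

w, b = train_neuron(X, y_or)
preds = [sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b) for x in X]
print([round(p, 2) for p in preds])
```

In a multi-layer network, the same chain-rule bookkeeping is repeated layer by layer, from the output back to the input; frameworks like TensorFlow and PyTorch automate exactly this step.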

Activation Functions: The Decision Makers

Different activation functions serve different purposes:

- Sigmoid: Classic choice (0 to 1 range)

- ReLU: Modern favorite (simple, effective)

- Tanh: Symmetric alternative (-1 to 1 range)

Why ReLU dominates: It’s computationally efficient and helps mitigate the vanishing gradient problem—a critical issue in deep networks.
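The vanishing gradient argument is easiest to see numerically. This sketch implements the three activations’ derivatives in plain Python and multiplies one derivative per layer, as backpropagation does along a chain of neurons. It is a simplification: the 10-layer depth is an illustrative assumption, and the weight terms that also enter the real product are ignored.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def sigmoid_grad(z):
    s = sigmoid(z)
    return s * (1.0 - s)            # peaks at 0.25 when z = 0

def tanh_grad(z):
    return 1.0 - math.tanh(z) ** 2  # peaks at 1.0 when z = 0, decays for large |z|

def relu(z):
    return max(0.0, z)

def relu_grad(z):
    return 1.0 if z > 0 else 0.0    # exactly 1 for any positive input

# Backpropagation multiplies one such derivative per layer. Even in the sigmoid's
# best case (z = 0 everywhere), a 10-layer chain shrinks the gradient by 0.25 ** 10.
depth = 10
print("sigmoid:", sigmoid_grad(0.0) ** depth)  # about 9.5e-07
print("tanh:   ", tanh_grad(2.0) ** depth)
print("relu:   ", relu_grad(2.0) ** depth)     # 1.0
```

Because ReLU’s derivative is exactly 1 for active units, gradients flowing through them are not shrunk by the activation itself.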

Practical Applications: Where Neural Networks Are Changing the Game

Healthcare Revolution

Neural networks are matching or exceeding specialist accuracy on some narrow diagnostic tasks. In radiology studies, they’ve flagged tumors that human readers missed. In drug discovery, they’re predicting molecular interactions that would take years to test experimentally.

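Real Impact: Google’s DeepMind developed AlphaFold, whose second version predicted protein structures with near-experimental accuracy in 2020. It was a landmark result on the 50-year-old protein folding problem and could dramatically speed up drug discovery.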

Financial Markets

Hedge funds use neural networks to detect subtle patterns in market data that human analysts can’t perceive. The result? Trading systems that digest more data and react faster than any human trader, although whether they reliably beat the market over the long run remains contested.

Risk Factor: This creates an arms race where those without AI capabilities are essentially bringing knives to gunfights.

Creative Industries

From AI-generated art to music composition, neural networks are demonstrating creative capabilities that challenge our definitions of artistry. DALL-E and Midjourney are just the beginning.

Implementation Example: Building Your First Neural Network

Let’s create a simple convolutional neural network that classifies the MNIST handwritten digits, using Python and TensorFlow:

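import tensorflow as tf
from tensorflow.keras import layers, models

# Load MNIST: 28x28 grayscale digit images with integer labels 0-9
(train_images, train_labels), (test_images, test_labels) = tf.keras.datasets.mnist.load_data()

# Add a channel dimension and scale pixel values from [0, 255] to [0, 1]
train_images = train_images.reshape(-1, 28, 28, 1).astype("float32") / 255.0
test_images = test_images.reshape(-1, 28, 28, 1).astype("float32") / 255.0

# Build the model
model = models.Sequential([
    layers.Conv2D(32, (3, 3), activation='relu', input_shape=(28, 28, 1)),
    layers.MaxPooling2D((2, 2)),
    layers.Conv2D(64, (3, 3), activation='relu'),
    layers.MaxPooling2D((2, 2)),
    layers.Flatten(),
    layers.Dense(64, activation='relu'),
    layers.Dense(10, activation='softmax')
])

# Compile the model: integer labels pair with sparse categorical cross-entropy
model.compile(optimizer='adam',
              loss='sparse_categorical_crossentropy',
              metrics=['accuracy'])

# Train the model (the test set doubles as validation data here for simplicity)
history = model.fit(train_images, train_labels,
                    epochs=10,
                    validation_data=(test_images, test_labels))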

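What’s happening here:

- Conv2D layers: Slide small learned filters (3x3 here) across the image to extract features such as edges and strokes

- MaxPooling: Downsamples each feature map by keeping the largest value in every 2x2 window, halving its width and height

- Flatten: Unrolls the stacked feature maps into a single vector for the dense layers

- Dense layers: Combine the extracted features to make the final classification

- Softmax activation: Converts the 10 outputs into probabilities that sum to 1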

This simple network can achieve over 95% accuracy on MNIST digit recognition—demonstrating the power of even basic neural architectures.
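To demystify what those layers compute, here is a toy plain-Python sketch of the operations behind `Conv2D`, `MaxPooling2D`, softmax, and the sparse categorical cross-entropy loss. It uses a made-up 5x5 image and a hand-picked vertical-edge kernel; in the real model the kernels are learned, and the library implementations are vastly faster. The function names here are hypothetical, not Keras APIs.

```python
import math

def conv2d(image, kernel):
    # "Valid" convolution, stride 1: slide the kernel and take a weighted sum at each position.
    # (Like deep learning libraries, this is technically cross-correlation: no kernel flip.)
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [sum(image[i + a][j + b] * kernel[a][b] for a in range(kh) for b in range(kw))
         for j in range(out_w)]
        for i in range(out_h)
    ]

def max_pool2d(feature_map, size=2):
    # Keep the largest value in each non-overlapping size x size window (leftovers dropped)
    h, w = len(feature_map) // size, len(feature_map[0]) // size
    return [
        [max(feature_map[i * size + a][j * size + b] for a in range(size) for b in range(size))
         for j in range(w)]
        for i in range(h)
    ]

def softmax(logits):
    # Subtract the max for numerical stability; outputs are positive and sum to 1
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def sparse_categorical_crossentropy(probs, label):
    # Loss for an integer class label: negative log of the probability given to the true class
    return -math.log(probs[label])

# Toy 5x5 "image" containing a vertical edge, and a 3x3 vertical-edge detector (both made up)
image = [[0, 0, 1, 1, 1] for _ in range(5)]
kernel = [[-1, 0, 1] for _ in range(3)]

features = conv2d(image, kernel)   # 3x3 feature map
pooled = max_pool2d(features)      # 1x1 after 2x2 pooling (odd row/column dropped)
probs = softmax([2.0, 1.0, 0.1])

print(features)  # → [[3, 3, 0], [3, 3, 0], [3, 3, 0]]
print(pooled)    # → [[3]]
print(probs, sparse_categorical_crossentropy(probs, 0))
```

The feature map lights up where the kernel straddles the edge and drops to zero where the image is uniform, which is the kind of feature a trained `Conv2D` filter learns to detect.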

Challenges & Pitfalls: The Dark Side of Neural Networks

The Black Box Problem

Neural networks are often “black boxes”—we know they work, but we don’t always understand why. This creates serious issues in critical applications like healthcare and autonomous vehicles.

My strong opinion: Until we solve interpretability, we’re building cathedrals on sand. The current “it works, trust me” approach is dangerously naive.

Data Dependency

Neural networks are data-hungry monsters. They require massive datasets to learn effectively, which creates barriers for smaller organizations and raises privacy concerns.

Computational Costs

Training state-of-the-art models demands enormous amounts of compute and electricity, and the combined energy appetite of AI data centers is increasingly compared to that of small countries. The environmental impact is non-trivial.
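To put model size in perspective, here is a back-of-the-envelope parameter count for the small MNIST model from the implementation example, written as plain Python arithmetic. It assumes that model’s settings (3x3 kernels, the default 'valid' padding, 2x2 pooling); Keras’s `model.summary()` should report the same per-layer counts.

```python
# Count learnable parameters layer by layer for the MNIST CNN
# (3x3 kernels, 'valid' padding, 2x2 pooling, as in that model).

def conv_params(kernel_h, kernel_w, in_channels, filters):
    # Each filter has kernel_h * kernel_w * in_channels weights plus one bias
    return (kernel_h * kernel_w * in_channels + 1) * filters

def dense_params(in_features, units):
    return in_features * units + units  # weight matrix plus one bias per unit

h = w = 28
conv1 = conv_params(3, 3, 1, 32)     # 28x28x1  -> 26x26x32
h, w = (h - 2) // 2, (w - 2) // 2    # pool     -> 13x13x32
conv2 = conv_params(3, 3, 32, 64)    # 13x13x32 -> 11x11x64
h, w = (h - 2) // 2, (w - 2) // 2    # pool     -> 5x5x64
flat = h * w * 64                    # 1600 features after Flatten
dense1 = dense_params(flat, 64)
dense2 = dense_params(64, 10)

total = conv1 + conv2 + dense1 + dense2
print(conv1, conv2, dense1, dense2, total)  # → 320 18496 102464 650 121930
```

That is roughly 122,000 parameters, most of them in the first dense layer. Frontier models have billions (GPT-3 has 175 billion), and training cost grows with both parameter count and the amount of training data.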

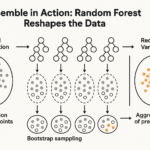

Overfitting: The Siren’s Call

Neural networks can memorize training data perfectly while failing on new examples. It’s like a student who memorizes answers without understanding concepts.

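# Signs of overfitting (illustrative numbers)
training_accuracy = 0.99    # Near-perfect on training data
validation_accuracy = 0.65  # Poor on new data
gap = training_accuracy - validation_accuracy  # A large train/validation gap signals overfitting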
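One standard defense is early stopping: monitor validation loss during training and keep the weights from the epoch where it was lowest. Below is a minimal plain-Python sketch of that logic on made-up loss curves; the `patience` value, the function name, and the numbers are illustrative assumptions. In Keras you would normally reach for the built-in `tf.keras.callbacks.EarlyStopping` callback (with `monitor='val_loss'`, `patience`, and `restore_best_weights=True`) rather than rolling your own.

```python
def early_stopping_epoch(val_losses, patience=3):
    """Return the index of the epoch whose weights early stopping would keep.

    Training halts once validation loss has failed to improve for `patience`
    consecutive epochs; the best epoch seen so far is returned.
    """
    best_epoch, best_loss, waited = 0, float("inf"), 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_epoch, best_loss, waited = epoch, loss, 0
        else:
            waited += 1
            if waited >= patience:
                break  # validation loss stopped improving: likely overfitting
    return best_epoch

# Made-up curves: training loss keeps falling while validation loss turns upward
train_losses = [0.90, 0.60, 0.45, 0.35, 0.28, 0.20, 0.14, 0.09, 0.05]
val_losses = [0.95, 0.70, 0.55, 0.50, 0.52, 0.56, 0.61, 0.68, 0.75]

best = early_stopping_epoch(val_losses)
print("keep weights from epoch", best)  # → keep weights from epoch 3
print("train/val loss there:", train_losses[best], val_losses[best])
```

Past epoch 3 the network keeps getting better at the training data while getting worse on unseen data, which is the memorizing student from the analogy above.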

Future Outlook: Where We’re Headed

Neuromorphic Computing

The next frontier involves building hardware that mimics the brain’s architecture more closely. Instead of traditional CPUs, we’re moving toward chips that process information more like biological neurons.

Explainable AI (XAI)

The research community is racing to develop techniques that make neural networks more interpretable. This isn’t just academic—it’s essential for regulatory approval and public trust.

Edge Computing

The future involves running neural networks on devices (phones, cars, IoT devices) rather than in the cloud. This enables real-time processing while preserving privacy.

Philosophical Implications

We’re approaching a point where neural networks may develop emergent capabilities we don’t fully understand or control. The ethical questions are becoming as important as the technical ones.

Conclusion: The Intelligence Revolution Is Here

Neural networks represent the most significant technological advancement since the internet. They’re not just tools—they’re fundamentally new ways of processing information and solving problems.

The key insight: Neural networks borrow their core idea from the most powerful computing system we know, the human brain. But unlike biological brains, they can be copied, scaled up, and specialized for specific tasks at will.

As the great rock philosopher Neil Young once sang, “It’s better to burn out than to fade away.” In the context of neural networks, this translates to: It’s better to understand and shape this technology than to be shaped by it.

The neural network revolution isn’t coming—it’s already here. The question isn’t whether you’ll be affected, but whether you’ll be a driver or passenger.

References & Further Reading

- Foundational Papers:

  - McCulloch, W.S., & Pitts, W. (1943). “A logical calculus of the ideas immanent in nervous activity.” Bulletin of Mathematical Biophysics, 5, 115–133.

  - Rumelhart, D.E., Hinton, G.E., & Williams, R.J. (1986). “Learning representations by back-propagating errors.” Nature, 323, 533–536.

- Modern References:

  - Goodfellow, I., Bengio, Y., & Courville, A. (2016). Deep Learning. MIT Press.

  - LeCun, Y., Bengio, Y., & Hinton, G. (2015). “Deep learning.” Nature, 521, 436–444.

- Practical Resources:

  - TensorFlow Documentation

  - PyTorch Tutorials

  - fast.ai Practical Deep Learning Course

Call to Action

Ready to dive deeper? Here are your next steps:

- Experiment: Run the code example above on Google Colab (it’s free)

- Learn: Pick one application area that interests you and build a simple project

- Discuss: Share your thoughts or questions in the comments below

- Stay Updated: Follow leading researchers like Yann LeCun, Andrew Ng, and Fei-Fei Li

Share this article with someone who needs to understand why neural networks matter. Because in the age of AI, ignorance isn’t just bliss—it’s professional suicide.

What will you build with this knowledge? The future is waiting.
